Quadratic forms, local minimizers, and a cool relationship between the two

This semester, I am diverging a bit from the usual Aerospace Engineering workload with a math class that I had been eyeing for a while: MATH 467, Theory and Computational Methods of Optimization. It is no surprise that this interests me - half of designing airplanes is optimizing them, and I’ve found a great passion in modelling/simulations for trade studies and design space investigations in the early conceptual design phase.

What I underestimated is the “MATH” part of “MATH 467.” This course is quite theory heavy, with a less pronounced “computational” part as I had hoped for. However, I’ve been having an awful lot of fun, and sometime ago a friend and I had a small lightbulb moment that I thought I’d share. It is not much per se, just a cool little insight. But first, a bit of linear algebra…

Quadratic Forms

During the first couple of weeks, the class was a review of linear algebra. This has become the subject that fascinates me the most: even the most simple definitions have geometric interpretations that give a lot of insight into other areas of the subject. We reviewed one thing, however, that I had never come across before: quadratic forms.

A quadratic form is simply a function of the form:

Where is a symmetric matrix. Note the domain and range in the function here: takes in a vector and outputs a real number .

These are called quadratic forms because the polynomial will be of second degree. More accurately, the polynomial itself is in quadratic form, and it just so happens that we can write it in the form for a symmetric matrix .

Another quadratic form fun fact: there is no loss of generality in demanding that be symmetric, because you can always let:

Which guarantees and:

A simple example in 3D gives some more insight into what this truly means. Consider the matrix :

We can find its quadratic form simply by the definition:

Multiplying these out yields:

We thus see that the matrix simply holds the coefficients to the quadratic polynomial ! It also becomes clear why there is no loss of generality when we take the “average” matrix, that is, half of the sum of the matrix and its transpose. The new matrix will have equal diagonal (since the transpose’s diagonal doesn’t change, and adding then halving the same entries will just give us the original entries.) The “cross” terms, that is, when , will be added together and halved. However, their sum appears in the quadratic expansion. Take the term, for example:

So the quadratic form is preserved.

At this point, I thought to myself: “cool.” But also… where is this used? Turning to my friend and asking this question, he said “apparently everywhere.” And he was right! Quadratic forms are incredibly important in statistics, mechanics, and many other areas of mathematics. For the purpose of this class, they are also incredibly important: they show up all the time in optimization. This post will explore one example of such appearance.

But first, another concept that is almost equally important to the quadratic form itself: its definiteness.

Definiteness of a Quadratic Form

A quadratic form can be deemed positive definite, positive semidefinite, negative definite, or negative semidefinite.

A quadratic form is positive definite if:

And it is positive semidefinite if:

In words: f is positive definite if it is positive for all nonzero vectors , and positive semidefinite if it is positive or zero for all vectors . Negative definite and semidefinite forms are defined similarly (in terms of the strict inequality and nullity or otherwise of possible .)

There is a nice method for determining whether the quadratic form of a matrix is positive definite called Sylvester’s Criterion. It states that A matrix is positive definite if and only if all of its leading principal minors are nonnegative.

This was another new one to me - what are leading principal minors? The definition might seem loose but is visually pretty satisfying: these are the determinants of the matrices obtained by successively removing the last row and column.

Back to our example:

Let’s successively remove the last rows and columns, and let’s call these submatrices . Before we even begin, the determinant of matrix itself is a minor:

Removing the last row and column, we get the next minor:

Removing the last row and column, we get the last minor:

Which is, of course, just . From Sylvester’s Criterion, therefore, is positive definite if and only if:

This can come in quite handy!

Ok, before we talk about minimizers, there is one last thing to be introduced.

Multivariate Taylor Expansion

For a single variable function ., the Taylor expansion about is the very familiar polynomial:

However, we now want to consider the case where . For this case, let’s consider instead the Taylor expansion about of .

The linear approximation is familiar:

This is analogous to the single variate case with a small difference: . In other words, the first derivative is now an n-dimensional vector:

The transpose of this row vector is the gradient vector , that is, .

Now, here’s the nice part: for the second order approximation, we write:

This is analogous to the single variate case with two differences: and is called the Hessian matrix, sometimes denoted :

Because so the Hessian is symmetric and:

is, you guessed it, a quadratic form!

More precisely, the Hessian is symmetric if f is twice continuously differentiable at x, a fact known as Clairaut’s Theorem. For example, the function:

Has Hessian equal to:

The insight from this blog post really lies in this fact: one of the reasons quadratic forms are so important in optimization is because the Taylor expansion of functions in that are at least twice continuous and differentiable (i.e. ) features a quadratic form where the coefficient matrix is none other than the Hessian of . And as it turns out, several optimization algorithms, namely gradient-based methods, make extensive use of the Taylor approximation about points in multivariate functions!

Feasible Directions

There are two last concepts before we get into examples that show the usefulness of quadratic forms in determining whether a point is a minimizer. The first one is a feasible direction.

Some problems might constrain our functions to a set . To deal with points that might lie on the boundary of that constraint, we need the notion of a feasible direction, which will come in handy. Let . A vector is a feasible direction at if there exists some such that:

In words: is a feasible direction if there is some real number wherein you can march in the direction of by and still remain in the set.

From this definition, another useful tool comes in: the directional derivative! In calculus, we learn this as the rate of change of the function in some direction. For multivariate functions, we can define it more generally as:

is the directional derivative of at in the direction of . Note that this is the inner product between the direction and the gradient, so if , the inner product gives the rate of change of in the direction of at point .

Necessary Conditions for Local Minimizers

Ok, last concept: first and second order necessary conditions! These refer to conditions that must be satisfied so that a point (a subset of ) is a potential local minimizer.

The first order necessary condition states that if a point is a local minimizer of over , then:

For every feasible direction . In words: if a point is a local minimizer, then the directional derivative for all feasible directions at that point must be positive. There is some intuition here: everywhere you look, in the directions where you stay within the constraint of your function, the function grows - therefore, you could be at a minimum!

If the point is an interior point then we can say that if is a local minimizer:

This corollary has a nice proof: If a point is interior, then the set of feasible directions is all of because for any direction, you can find a small enough such that remains in . Therefore, if is a local minimizer, then . However, if we look in the opposite direction, we also have , so it must be true that:

And therefore:

*️⃣ This is the First Order Necessary Condition (FONC)

But having for every feasible direction is not enough! The second order necessary condition states that if a point is a local minimizer, is a feasible direction, and , then:

for all feasible directions, and where is the Hessian at .

*️⃣ This is the Second Order Necessary Condition (FONC)

Finally, look at that: this is a quadratic form! The function will satisfy the second order necessary condition if the Hessian is positive semidefinite. It also follows that if the Hessian is positive definite (checkable with Sylvester’s Criterion) and all other conditions are met, the second order necessary condition is met.

In fact, we can say something a bit better if the Hessian is positive definite. If is an interior point, (FONC is met) and the Hessian is positive definite, that is, , then is a strict local minimizer! This is known as the Second Order Sufficient Condition (SOSC) because we conclude that the point is a local minimizer from a set of conditions (sufficient) instead of implying a set of conditions from the fact that the point is a local minimizer (necessary.)

That’s it! Some examples:

Example: First Order Necessary Condition

Consider the function:

Subject to the following constraint:

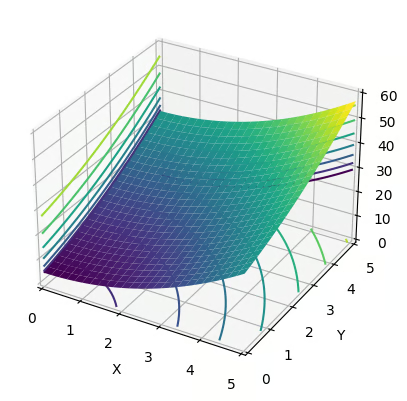

Note how we are dealing with a multivariate function, so gradients are vectors and Hessians are matrices! Visualizing the function:

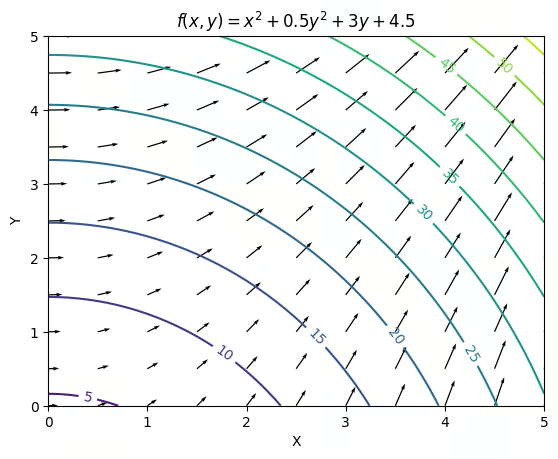

Shows how it behaves in the interval. Plotted above are the level sets in each plane. Most important for us, of course, are the level sets for , which is on the “xy” plane.

Visually, it is easy to see how [0,0] is the only minimizer. Below are the gradients plotted over the level set:

Notice that at any interior point, there exists a feasible direction that points away from the gradient, hence and does not satisfy the FONC.

Point [0,0] on the other hand does. Let’s compute the gradient:

Because we are on the boundary, it does not suffice to say . Instead, we must have:

At [0,0], is simply . However, for to be feasible we demand both , so for sure at .

Example: Second Order Necessary Condition

Consider the function:

Let’s try to find minimizers! This function’s minimizers must satisfy the first and second order necessary conditions. Taking its gradient and Hessian yields:

To satisfy the FONC, we solve:

Which yields the single point:

Which is our suspect. Let’s use our definiteness tool for the Hessian to verify the second order necessary and sufficient conditions. From Sylvester’s Criterion:

So the Hessian is neither positive definite nor positive semidefinite. In fact, it is indefinite. Because this fails the SONC, and it is the only point that could be a local minimizer (satisfies FONC,) the function does not have any minimizers. Sad day for .

Example: A bit of trouble!

Here’s an example where the FONC is a bit useless unless you rethink the problem a bit. Consider the problem of minimizing in subject to the following set constraint:

Let’s try to find minimizers. The FONC is:

For all feasible directions. However, there are no feasible directions in any x! Here’s why: The set is the edge of a unit circle. From any point in the circle, you cannot choose a direction where you remain on the edge along all points in the line you march. Sure, you can march towards another opposite edge. However, there would exist an such that . This would correspond to an that lands you inside the circle, not on the edge. You could also pick the tangent to the point where you are in. However, marching some small step along that tangent would put you very close but not quite on the edge.

Because of this, every single point in the set satisfies the FONC. Notice how the FONC here is not useful at all for narrowing down local minimizer candidates.

We can rephrase this problem, however. If we parametrize the points along with:

We can use the FONC with respect to instead. Now, , so we minimize the composite function . Its gradient by the chain rule is:

But . The FONC is now applied to instead!

Conclusion

Though I digressed a bit, the insight here is that tools from linear algebra that might seem irrelevant and simplistic pop up all the time in applications. I spared this post from a motivation into why this is all so important, but it is easy to see how applicable this is to engineering design optimization. Even the most simple devices can have unexpected optimization problems.

3Blue1Brown has a fantastic video on Newton’s Method and how Newton’s Fractal appears in it. He motivates the viewer with a computer graphics example: how can a point know whether it must be colored white or black if that decision is based off of its distance from a curve of known geometry? It is easy to see here that any point on the screen might want to solve the problem of minimizing the distance between its position and the curve.

Of course, a lot of the applications of the theories above are more in the field of numerical methods - questions such as how do you numerically compute the gradient and Hessians when their closed-form are not known - but the foundations are simple yet extremely insightful ideas as the ones shown above.

Anyways, hope you enjoyed it!